Schedulers and the Need for Concurrency

To get the best computational efficiency from a multicore processor, operating system kernels use kernel-level threads to provide concurrency. To get even more out of these threads, programming languages can use user-level threads. These are managed in user space, by the language runtime or by the developer, rather than by the OS kernel, so the program controls how they are handled.

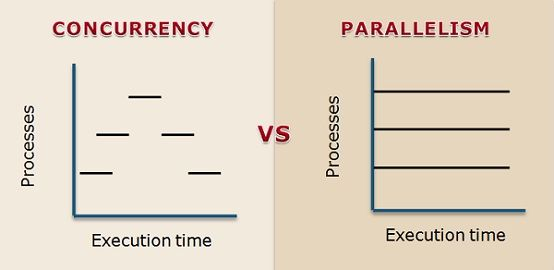

The demand for computational efficiency keeps increasing, and so does the need for concurrency. But before discussing that need, let's first look at the difference between concurrency and parallelism.

Concurrency is about handling multiple tasks during the same period of time, for example by interleaving them on a single core. Parallelism is about executing multiple tasks at the same instant on different cores. The OS's kernel threads are not the developer's to manage: the operating system creates and schedules them on its own, including the scheduling of tasks onto them.
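To make the distinction concrete, here is a small Go sketch; runTasks and countTo are hypothetical helpers made up for illustration. It runs the same CPU-bound goroutines twice. With runtime.GOMAXPROCS(1), the Go scheduler interleaves them on a single processor, which is concurrency without parallelism. With GOMAXPROCS set to the CPU count, they may also run in parallel. The results are identical either way; only how the work overlaps in time changes.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// countTo is a hypothetical CPU-bound task: it just counts up to n.
func countTo(n int) int {
	c := 0
	for i := 0; i < n; i++ {
		c++
	}
	return c
}

// runTasks runs `tasks` goroutines with GOMAXPROCS temporarily set to procs.
// With procs == 1 they are interleaved on one processor (concurrency only);
// with procs > 1 they may also run at the same time (parallelism).
func runTasks(procs, tasks, n int) []int {
	prev := runtime.GOMAXPROCS(procs) // set the limit, remembering the old value
	defer runtime.GOMAXPROCS(prev)    // restore it when done

	results := make([]int, tasks)
	var wg sync.WaitGroup
	for i := 0; i < tasks; i++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			results[id] = countTo(n) // each goroutine writes only its own slot
		}(i)
	}
	wg.Wait()
	return results
}

func main() {
	fmt.Println("GOMAXPROCS=1:     ", runTasks(1, 4, 1_000_000))
	fmt.Println("GOMAXPROCS=NumCPU:", runTasks(runtime.NumCPU(), 4, 1_000_000))
}
```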

Programming languages let developers use user-level threads that are managed by the program itself. A thread is spawned, and then it can run, wait, or be suspended, all under the explicit control of the program. This allows tasks to be switched on and off threads, that is, loaded into and unloaded from the execution state, without the kernel's involvement.

Preemptive scheduling of OS threads is a good way to execute tasks and distribute them across the processor, but switching between threads is costly: every context switch between threads adds latency to the execution. Programming languages have been evolving to overcome this, and in current major languages we generally see two paradigms for handling it.

Async-Await (event loop), used in:

Lightweight Threads, used in:

Java's reactive programming libraries are a special case: they try to emulate an event loop, but underneath they are built on threads only. Let's discuss the different styles in which programming languages have made task switching more efficient in the race for concurrency.

In this style of concurrent task handling, the code is structured so that an operation can be suspended at a point in its flow using an await statement. The developer splits the code into functions that can be executed concurrently, and the language's runtime puts these functions into an event queue. The event loop takes tasks from this queue and executes them one at a time; whenever a task awaits an async operation, it is suspended, and its continuation is pushed back onto the queue once the operation completes.

A good place to split the code is usually at blocking calls such as I/O, network calls, or file operations. These operations are blocking by nature: the OS system calls needed to perform them block the current thread.

This way, the flow of execution does not have to stop and wait at the blocking statement. Other queued tasks run in the meantime, and when the blocking operation completes, the suspended task is scheduled again and returns to the execution state with its saved context.

So the executing thread never needs to block: the programming language makes this possible by switching tasks at the points where they suspend. Since the thread never goes into a wait state, there is no need for the OS to preempt it.
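Go itself does not use async-await, but the event-loop mechanism described above can be sketched in Go as a minimal, single-threaded loop. This assumes one loop goroutine that runs callbacks from a queue one at a time, with time.Sleep on a separate goroutine standing in for a blocking I/O call handled outside the loop. EventLoop, Post, Async, and Run are hypothetical names; real async-await runtimes rely on non-blocking OS I/O and compiler-generated continuations rather than hand-written callbacks.

```go
package main

import (
	"fmt"
	"time"
)

// EventLoop is a hypothetical, minimal single-threaded event loop.
type EventLoop struct {
	ready     []func()    // runnable callbacks; touched only by the loop goroutine
	completed chan func() // continuations posted when simulated I/O finishes
	inFlight  int         // async operations started but not yet completed
}

func NewEventLoop() *EventLoop {
	return &EventLoop{completed: make(chan func(), 16)}
}

// Post queues a callback to run on the loop.
func (l *EventLoop) Post(cb func()) { l.ready = append(l.ready, cb) }

// Async starts a simulated blocking operation off the loop. When it
// finishes, then(result) is queued back onto the loop as a continuation.
func (l *EventLoop) Async(d time.Duration, result string, then func(string)) {
	l.inFlight++
	go func() {
		time.Sleep(d) // stands in for I/O completed outside the loop
		l.completed <- func() { then(result) }
	}()
}

// Run executes callbacks one at a time until no work or pending I/O remains.
func (l *EventLoop) Run() {
	for len(l.ready) > 0 || l.inFlight > 0 {
		if len(l.ready) == 0 {
			// Nothing runnable: wait for an async operation to complete.
			cb := <-l.completed
			l.inFlight--
			l.ready = append(l.ready, cb)
			continue
		}
		cb := l.ready[0]
		l.ready = l.ready[1:]
		cb()
	}
}

// demo runs two tasks; task A awaits simulated I/O while task B runs.
func demo() []string {
	loop := NewEventLoop()
	var log []string
	loop.Post(func() {
		log = append(log, "task A: start, awaiting I/O")
		loop.Async(20*time.Millisecond, "data", func(r string) {
			log = append(log, "task A: resumed with "+r)
		})
	})
	loop.Post(func() {
		log = append(log, "task B: runs while A waits")
	})
	loop.Run()
	return log
}

func main() {
	for _, line := range demo() {
		fmt.Println(line)
	}
}
```

Task B runs while task A waits, and A's continuation is pushed back onto the queue only once its simulated I/O completes, so the loop never sits idle while there is runnable work.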

Lightweight threads are a newer kind of thread implemented by the programming language's runtime itself, which runs them on top of the underlying OS threads to achieve efficient concurrency. In some languages they are called virtual threads.

This lightweight-thread construct handles the execution of tasks over the threads provided by the OS. Its custom scheduler follows its own algorithm to pick tasks and distribute them across the existing threads, so many lightweight threads are multiplexed onto a small number of OS threads (often called M:N scheduling).

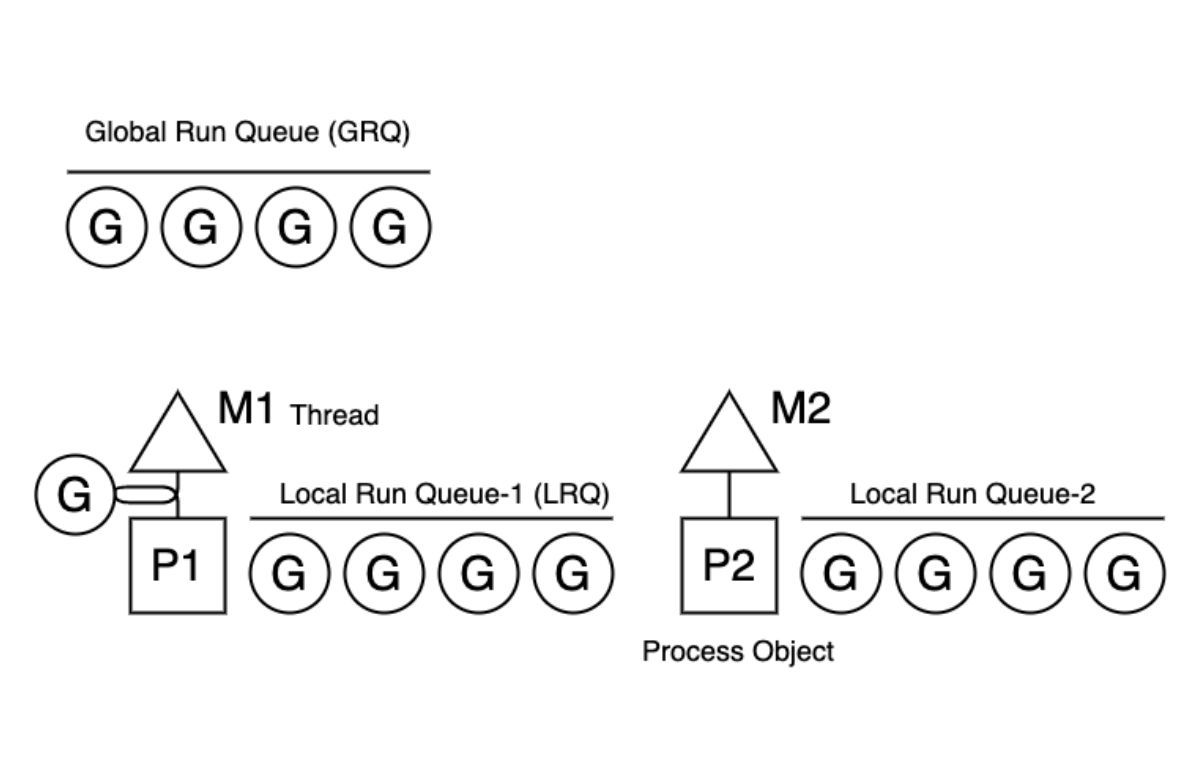

Golang runs concurrent tasks as lightweight threads called Goroutines. A Goroutine is started by calling a function with the special keyword go, and its execution is handled by Golang's internal scheduler.

Golang executes Goroutines on a fixed number of logical processors (P), set by the GOMAXPROCS environment variable and by default equal to the number of available CPUs; each P runs its Goroutines on an OS thread. Each P maintains a local run queue of Goroutines, and there is also a global run queue. A newly created Goroutine is usually added to the local run queue of the P that created it, and that's how it gets scheduled for execution.
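A minimal sketch of this model: the program below queries the current setting with runtime.GOMAXPROCS(0), which reads the value without changing it, and then starts one goroutine per input. The runtime places these goroutines on run queues and multiplexes them onto at most GOMAXPROCS processors at a time. fanOut is a hypothetical helper for illustration.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// fanOut is a hypothetical helper: it starts one goroutine per input and
// adds up the squares. Each new goroutine goes onto a run queue, and the
// runtime multiplexes all of them onto at most GOMAXPROCS processors.
func fanOut(inputs []int) int {
	var (
		wg  sync.WaitGroup
		mu  sync.Mutex
		sum int
	)
	for _, x := range inputs {
		wg.Add(1)
		go func(v int) {
			defer wg.Done()
			mu.Lock()
			sum += v * v
			mu.Unlock()
		}(x)
	}
	wg.Wait()
	return sum
}

func main() {
	// Passing 0 only queries the current setting; it does not change it.
	fmt.Println("NumCPU:", runtime.NumCPU(), "GOMAXPROCS:", runtime.GOMAXPROCS(0))

	inputs := make([]int, 1000)
	for i := range inputs {
		inputs[i] = i + 1
	}
	fmt.Println("1000 goroutines, sum of squares:", fanOut(inputs))
}
```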

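However, that's not all. Whenever a Goroutine blocks, for example on a channel operation, a mutex, or network I/O, the scheduler parks it and lets the next Goroutine run, so the underlying thread is not left blocked. When a Goroutine makes a blocking system call, the runtime hands its P over to another thread so that the remaining Goroutines keep running.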
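The effect of parking can be observed with a sketch that deliberately limits Go to a single processor via runtime.GOMAXPROCS(1). The consumer goroutine waits on an unbuffered channel receive; if that blocked the only thread, the producer could never run. Instead, the scheduler parks the consumer and runs the producer, which wakes it up. blockedThenResumed is a hypothetical name, and the channels are what enforce the order of the log entries.

```go
package main

import (
	"fmt"
	"runtime"
)

// blockedThenResumed is a hypothetical demo that runs with a single P.
func blockedThenResumed() []string {
	prev := runtime.GOMAXPROCS(1) // only one processor may run Go code
	defer runtime.GOMAXPROCS(prev)

	var log []string             // each append is ordered by the channels below
	ready := make(chan struct{}) // consumer is about to wait for data
	data := make(chan int)       // unbuffered: a receive waits for a send
	done := make(chan struct{})  // consumer has finished

	go func() { // consumer
		log = append(log, "consumer: waiting for data")
		close(ready)
		// If nothing has been sent yet, the scheduler parks this goroutine
		// and the single P is free to run the producer.
		v := <-data
		log = append(log, fmt.Sprintf("consumer: got %d", v))
		close(done)
	}()

	go func() { // producer, runs on the same single processor
		<-ready
		log = append(log, "producer: sending 42")
		data <- 42
	}()

	<-done
	return log
}

func main() {
	for _, line := range blockedThenResumed() {
		fmt.Println(line)
	}
}
```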

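When a suspended Goroutine becomes runnable again, it may be queued on the local run queue of a different P, so the same Goroutine can execute on different OS threads over its lifetime. Goroutine switches originally happened only at cooperative points such as function calls, channel operations, and system calls, which is why Go's scheduler was long described as cooperative; since Go 1.14, the runtime can also preempt long-running Goroutines asynchronously.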

Another notable feature of Golang's scheduler is work stealing. When a P has no queued Goroutines, it looks for work elsewhere: it checks the global run queue and the network poller, and it steals about half of the Goroutines from another P's local run queue. This balances the tasks, so none of the threads gets overloaded with queued Goroutines.
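Work stealing can be illustrated with a simplified simulation. This is a sketch under several assumptions, not the Go runtime's actual code: each hypothetical worker has a mutex-protected FIFO queue, all tasks start on worker 0, and an idle worker steals half (rounded up) of another worker's queue. The global run queue and network poller are left out, and tasks never create new tasks, so a worker can stop once it finds nothing left to steal.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// worker is a hypothetical, simplified processor with its own local run queue.
type worker struct {
	mu    sync.Mutex
	queue []int // task IDs, consumed FIFO
}

// pop takes the oldest task from the worker's own queue.
func (w *worker) pop() (int, bool) {
	w.mu.Lock()
	defer w.mu.Unlock()
	if len(w.queue) == 0 {
		return 0, false
	}
	t := w.queue[0]
	w.queue = w.queue[1:]
	return t, true
}

// stealHalf removes half (rounded up) of w's queued tasks and returns them.
func (w *worker) stealHalf() []int {
	w.mu.Lock()
	defer w.mu.Unlock()
	n := len(w.queue) - len(w.queue)/2
	stolen := append([]int(nil), w.queue[:n]...)
	w.queue = w.queue[n:]
	return stolen
}

// push appends tasks to the worker's own queue.
func (w *worker) push(tasks []int) {
	w.mu.Lock()
	defer w.mu.Unlock()
	w.queue = append(w.queue, tasks...)
}

// simulate queues every task on worker 0 and lets n workers run them,
// stealing from each other when their own queue runs dry. It returns which
// worker ran each task, how many steals happened, and how many tasks ran.
func simulate(n, tasks int) (ranBy []int, steals, executed int64) {
	workers := make([]*worker, n)
	for i := range workers {
		workers[i] = &worker{}
	}
	for t := 0; t < tasks; t++ {
		workers[0].queue = append(workers[0].queue, t) // deliberately unbalanced
	}

	ranBy = make([]int, tasks)
	var wg sync.WaitGroup
	for id := range workers {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			self := workers[id]
			for {
				if t, ok := self.pop(); ok {
					time.Sleep(50 * time.Microsecond) // simulated work
					ranBy[t] = id                     // one index per task: no data race
					atomic.AddInt64(&executed, 1)
					continue
				}
				// Local queue is empty: try to steal from the other workers.
				stole := false
				for off := 1; off < n && !stole; off++ {
					if batch := workers[(id+off)%n].stealHalf(); len(batch) > 0 {
						self.push(batch)
						atomic.AddInt64(&steals, 1)
						stole = true
					}
				}
				if !stole {
					return // nothing left to steal; tasks never spawn new tasks here
				}
			}
		}(id)
	}
	wg.Wait()
	return ranBy, steals, executed
}

func main() {
	ranBy, steals, executed := simulate(4, 200)
	perWorker := make([]int, 4)
	for _, w := range ranBy {
		perWorker[w]++
	}
	fmt.Println("executed:", executed, "steals:", steals, "tasks per worker:", perWorker)
}
```

The number of steals and the per-worker split vary from run to run, but every task runs exactly once, and the initially idle workers end up sharing worker 0's load.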

Kotlin coroutines are built on continuations. The developer marks functions with the suspend keyword and writes ordinary sequential code, and Kotlin's compiler implicitly turns each such function into a finite state machine in which every suspension point separates one step from the next. Whenever a step reaches an operation that cannot complete immediately, the coroutine suspends and frees its thread so that other work can be queued and run; when the operation completes, the continuation is resumed and queued for execution again, continuing from the next step.
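The state-machine idea can be sketched by hand. The Go code below is a hypothetical approximation of what a compiler could generate for a function with two suspension points, roughly user := fetchUser(); posts := fetchPosts(user); return a summary. The label field records where to resume, the struct fields keep local variables alive across suspensions, and a tiny queue-based driver plays the dispatcher. Kotlin's real generated code is based on Continuation objects and the COROUTINE_SUSPENDED marker, which this only loosely imitates; loadProfile, resume, run, and the faked fetch results are all made up for illustration.

```go
package main

import "fmt"

// stepResult says whether a coroutine suspended or ran to completion
// (loosely modelled on Kotlin's COROUTINE_SUSPENDED marker).
type stepResult int

const (
	suspended stepResult = iota
	finished
)

// loadProfile is a hypothetical hand-written state machine for:
//   user := fetchUser(); posts := fetchPosts(user); return summary
// label records where to resume; the fields hold locals that must survive
// a suspension.
type loadProfile struct {
	label  int
	user   string
	posts  int
	result string
}

// resume continues from the saved label until the next suspension point or
// completion. value is the result of the operation that just finished.
func (c *loadProfile) resume(value any) stepResult {
	switch c.label {
	case 0: // start: issue fetchUser() and suspend
		c.label = 1
		return suspended
	case 1: // fetchUser() finished: keep its result, issue fetchPosts(user)
		c.user = value.(string)
		c.label = 2
		return suspended
	case 2: // fetchPosts() finished: build the final result
		c.posts = value.(int)
		c.result = fmt.Sprintf("%s has %d posts", c.user, c.posts)
		c.label = 3
		return finished
	}
	panic("resumed a finished coroutine")
}

// run is a tiny single-threaded dispatcher: a suspended coroutine goes to the
// back of the queue and, when resumed, receives a faked operation result.
func run(users []string) (results, trace []string) {
	coros := make([]*loadProfile, len(users))
	var queue []int
	for i := range users {
		coros[i] = &loadProfile{}
		queue = append(queue, i)
	}
	results = make([]string, len(users))
	for len(queue) > 0 {
		i := queue[0]
		queue = queue[1:]
		c := coros[i]
		var value any
		switch c.label {
		case 1:
			value = users[i] // pretend fetchUser() returned this name
		case 2:
			value = len(users[i]) // pretend fetchPosts() returned a count
		}
		trace = append(trace, fmt.Sprintf("coroutine %d resumes at label %d", i, c.label))
		if c.resume(value) == suspended {
			queue = append(queue, i) // requeue until its "operation" completes
		} else {
			results[i] = c.result
		}
	}
	return results, trace
}

func main() {
	results, trace := run([]string{"ana", "bob"})
	for _, t := range trace {
		fmt.Println(t)
	}
	fmt.Println(results)
}
```

Because each coroutine's state lives in an ordinary object rather than on a thread's stack, the driver can interleave the two coroutines step by step on a single goroutine.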

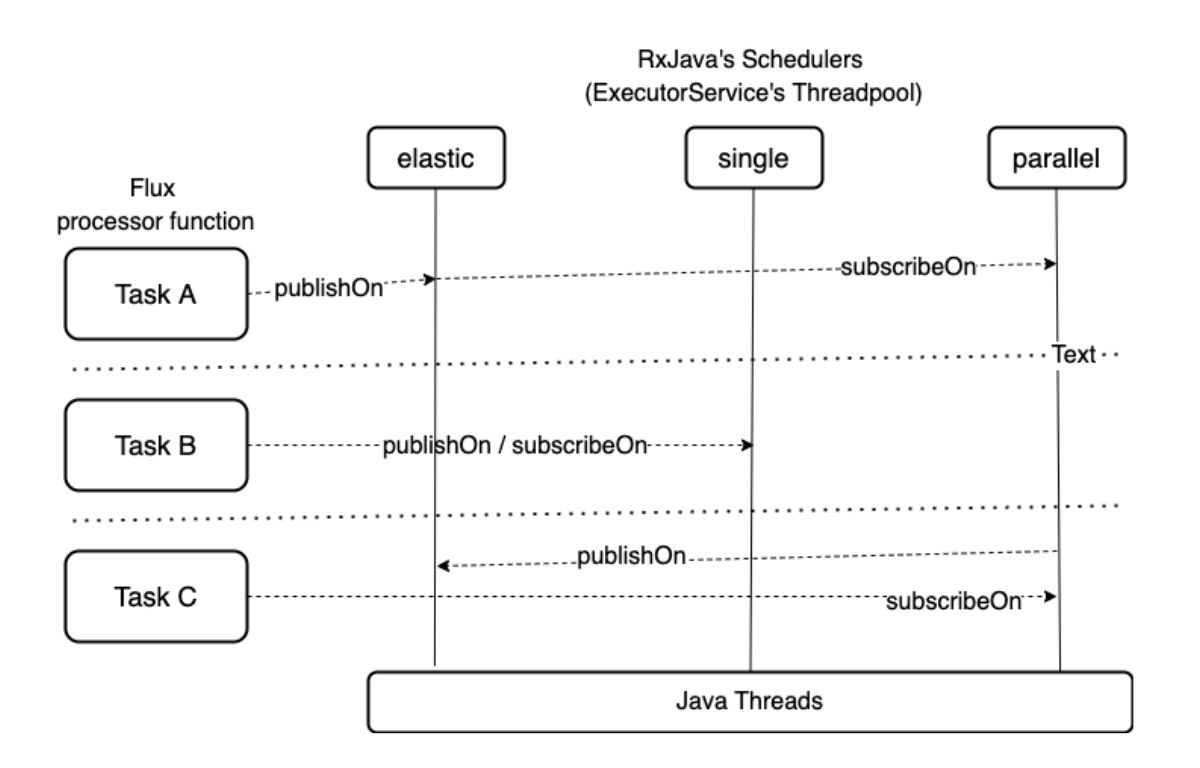

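Java's reactive libraries provide various Scheduler implementations. Project Reactor, for example, offers parallel, single, boundedElastic, immediate, and the older elastic (deprecated in favor of boundedElastic), while RxJava has comparable ones such as computation, io, single, and trampoline. These schedulers run async tasks on ExecutorService-based thread pools tuned for different use cases. For instance, parallel uses a fixed number of threads equal to the number of CPU cores and is recommended for computational tasks, while boundedElastic is meant for blocking work. If developers do not choose these schedulers wisely, the result can be thread starvation and backpressure issues.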

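The libraries provide operators such as subscribeOn, publishOn, and runOn (RxJava uses observeOn where Reactor uses publishOn) to choose which scheduler's threads the subscription, the downstream signals, and parallel rails run on. This way a task can run separately on a different thread, and when its blocking operation completes, the result is delivered to the subscriber.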
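As a rough analogy in Go, and not Reactor's or RxJava's API, a fixed-size scheduler such as parallel behaves like a pool with a fixed number of workers, one per CPU by default, pulling jobs from a shared queue, while the caller receives results as they arrive, much like a subscriber. parallelPool and result are hypothetical names. Because such a pool is small, jobs that block would tie up every worker, which is why blocking work belongs on a scheduler designed for it.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// result pairs a job index with its output.
type result struct {
	id, value int
}

// parallelPool is a hypothetical fixed-size pool: `workers` goroutines
// (NumCPU if workers < 1) take job indices from a shared channel, and the
// caller collects results from another channel as they arrive.
func parallelPool(workers int, jobs []int, work func(int) int) map[int]int {
	if workers < 1 {
		workers = runtime.NumCPU()
	}
	in := make(chan int)
	out := make(chan result)

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for id := range in {
				out <- result{id, work(jobs[id])}
			}
		}()
	}

	// Feed job indices, then close the output once every worker has exited.
	go func() {
		for id := range jobs {
			in <- id
		}
		close(in)
	}()
	go func() {
		wg.Wait()
		close(out)
	}()

	// The caller plays the subscriber, receiving results in completion order.
	got := make(map[int]int, len(jobs))
	for r := range out {
		got[r.id] = r.value
	}
	return got
}

func main() {
	square := func(x int) int { return x * x }
	res := parallelPool(0, []int{1, 2, 3, 4, 5}, square)
	fmt.Println(len(res), res[4])
}
```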

There is also a learning curve to writing clean reactive code. Errors in RxJava come with huge stack traces, which are difficult to debug and make it hard to narrow down business-logic errors.

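With virtual threads, introduced as a preview in Java 19 and finalized in Java 21, this kind of concurrency can be achieved much more efficiently, since they make task switching cheap while keeping the familiar thread-based style of code.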

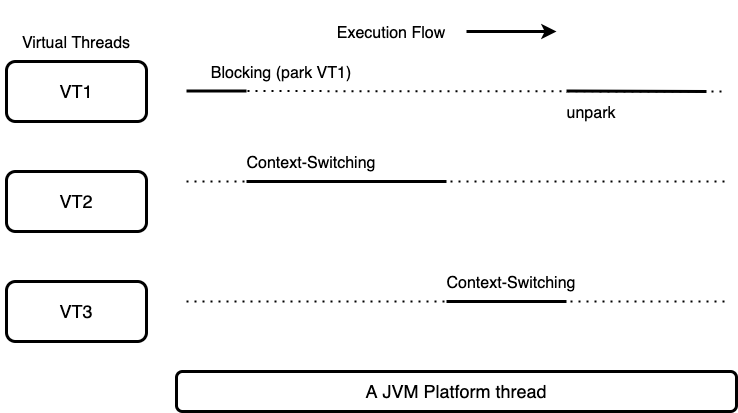

Early versions of Java used green threads: user-level threads scheduled by the JVM rather than by the OS, which simulated multithreading without relying on kernel-level threads. Java later moved to native OS threads, and Future, CompletableFuture, and Executors thread pools are available in the java.util.concurrent package for multithreading operations. With Java 19, these classic threads are now designated as platform threads, and a new kind of lightweight thread called the virtual thread is introduced.

Virtual threads use an existing pool of platform threads, called carrier threads, to execute tasks. A dedicated ForkJoinPool operating in FIFO mode is used as the virtual thread scheduler. When a virtual thread is unmounted from its carrier, its execution stack is saved on the heap, so that execution can continue from the point of suspension when it is mounted again.

In some cases, a virtual thread cannot be unmounted when its code blocks, for example while it is inside a native method or, in releases before JDK 24, inside a synchronized block. The virtual thread is then pinned to its carrier, and the underlying platform thread is blocked as well; the execution stack stays on that carrier thread instead of being moved to the JVM's heap.

Otherwise, a virtual thread yields (unmounts from its carrier) whenever it encounters a blocking operation, and to enable this, many core JDK classes, for example in networking and java.util.concurrent, have been modified. It's good to see the effort made to keep the way of coding the same as it has been for classic platform threads.

With great power comes great responsibility. Languages do their best to abstract these details away, but using these new features well still requires a good understanding of their schedulers.